🥁 Introduction

This notebook explores the capability of machine learning algorithms to distinguish between essays written by humans and those generated by Large Language models.

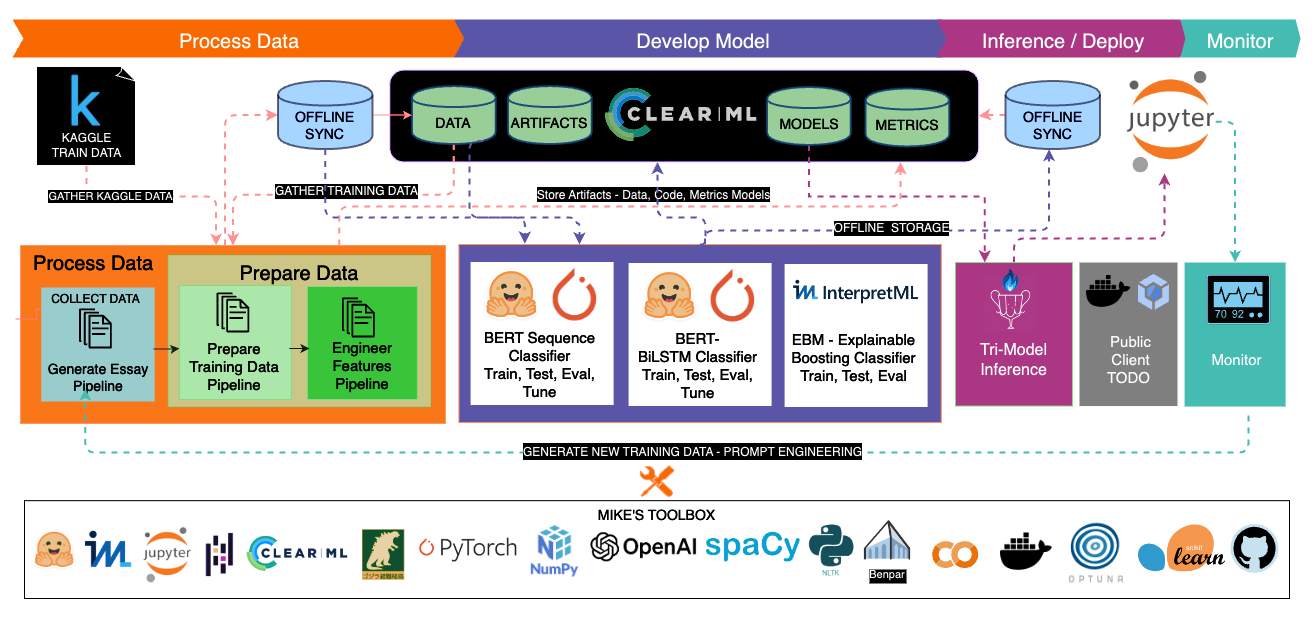

📐 Architecture

Using AWS ❝ Well-Architected Machine Learning Framework ❞ as a Guideline

Key Points:

- Design Philosophy: The architecture is aligned with the AWS 'Well-Architected Machine Learning' framework.

- Benefits:

- Ready to scale up going forward

- Ensures adherence to robust and efficient machine learning practices.

Importance:

- Best Practices: Aligns the project with industry standards in operational excellence, security, reliability, performance efficiency, and cost optimization.

- Future-Proofing: Even without current AWS implementation, this framework lays a strong foundation for potential AWS (and other cloud) integration.

Additional Resources:

- For a deeper understanding, visit AWS Well-Architected Machine Learning Framework.

🎮 Competition

https://www.kaggle.com/competitions/llm-detect-ai-generated-text

"Can you build a model to identify which essay was written by middle and high school students, and which was written using a large language model?"

"perhaps..."

📜 Hypothesis, Motivations and Objective

❈ Hypothesis: *Certain linguistic and structural patterns unique to AI-generated text can be identified and used for classification. We anticipate that our analysis will reveal distinct characteristics in AI-generated essays, enabling us to develop an effective classifier for this purpose.*

Motivations

- Learning and Challenge: Enhancing knowledge in Natural Language Processing (NLP) and staying intellectually active between jobs.

- Competition: https://www.kaggle.com/competitions/llm-detect-ai-generated-text

- Tool Development: Potential creation of a tool to differentiate between human and AI-generated content, useful across various fields.

- Educational Value: Serves as a practical introduction to production models in AI.

Objective

- Model Development: Building an ensemble model that combines two transformer models with an interpretable model like the Explainable Boosting Machine

- Challenges: Balancing effectiveness with interpretability.

- Approach:

- Developing an Explainable Boosting Machine (EBM) that uses custom features to compliment BERT's performance with better interpretability.

♪📕s

Data:

- https://www.kaggle.com/datasets/geraltrivia/llm-detect-gpt354-generated-and-rewritten-essays

- https://www.kaggle.com/datasets/thedrcat/daigt-v2-train-dataset

Models:

- https://www.kaggle.com/code/geraltrivia/ai-human-pytorchbertsequenceclassifier-model

- https://www.kaggle.com/code/geraltrivia/ai-human-pytorchcustombertclassifier-model

note: these are the two models in this notebook. Had to train in another, it's too expensive to run all at once.

Notebooks: This code and the ClearML pipelines including the Essay Generation are here https://github.com/mikewlange/ai-or-human.

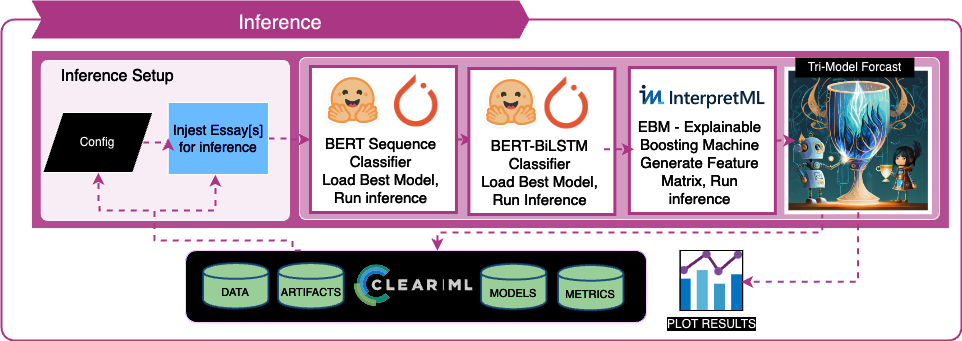

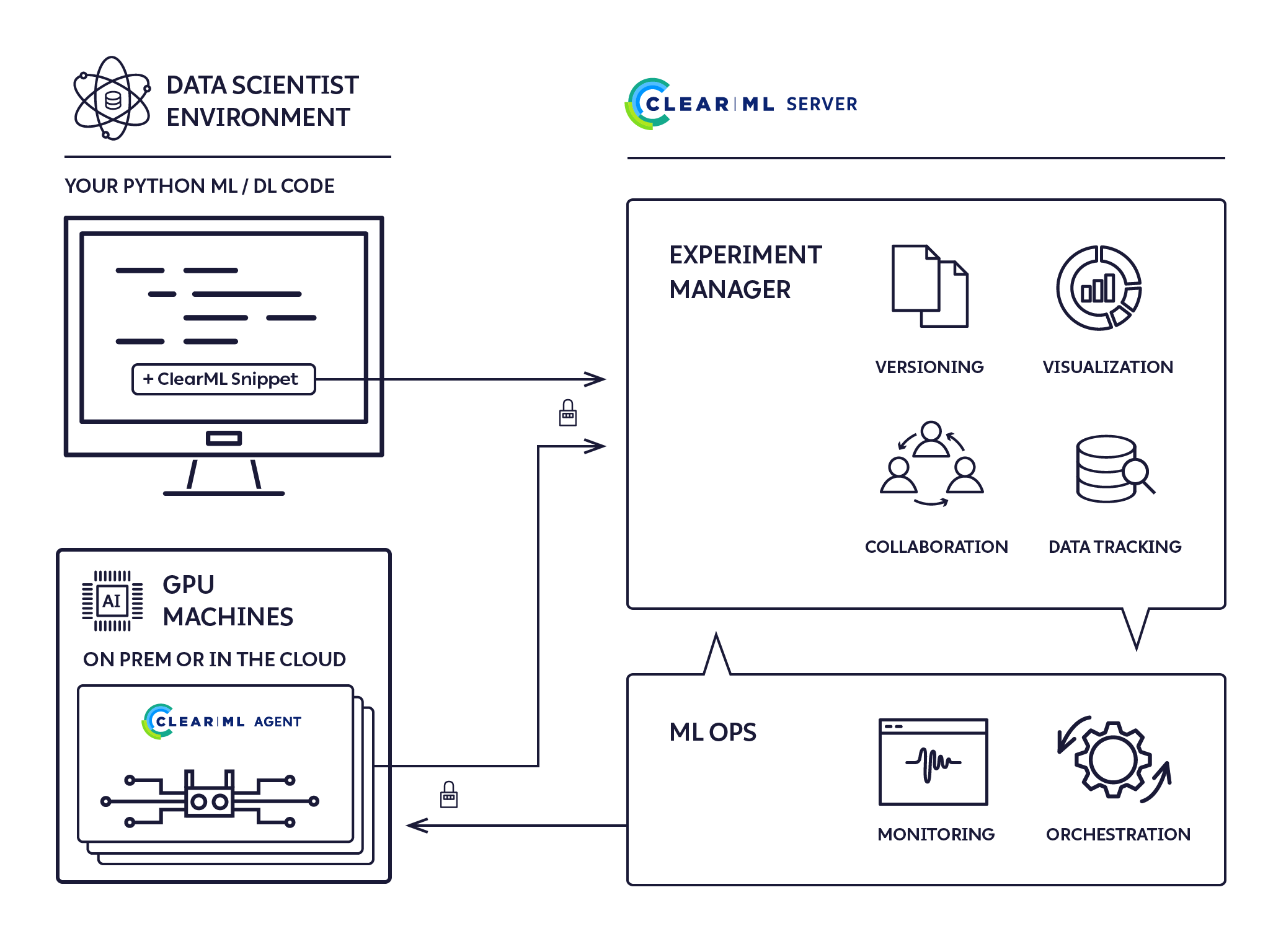

💡 ClearML Integration

"❤" - me

Strategic Choice and Benefits

- Purpose: ClearML integration to enhance workflow efficiency.

- Scalability and Efficiency: Focused on being prepared for scaling, even solo dev projects.

- Positive Experience: My experience with ClearML has been highly beneficial.

Implementation Details

- Pipelines Reference: In this project, all mentions of 'Pipelines' refer to ClearML pipelines.

- Resource Repository: A companion repository contains detailed code and examples for those interested in our ClearML implementation.

Impact of ClearML

- Operational Efficiency: ClearML has significantly improved the project's operational efficiency.

- Learning and Best Practices: It has also served as a platform for learning and applying best practices in ML operations, which is vital for scaling the project.

All the clearml related code is here: https://github.com/mikewlange/ai-or-human., but it's worth putting into the archtecture designs to help you see the whole picture.

✐ Setup for offline run

Kaggle Only

It's possible I'm doing this all wrong. To submit to a contest that disables the internet you neeed to add packages and other code/models that are not installed in the Kaggle docker image into a dataset (I.E storage) and use that as input to your notebook.

# turn off he internet and doing your pip installs, the packages you can't install, add to the libray var below

# TURN ON INTERNET FOR THIS

# creates a wheelhouse to add

#

# library = \

# '''

# textstat

# clearml

# sentence_transformers

# optuna

# interpret

# torchsummary

# empath

# benepar

# '''.lstrip('\n')

# with open('requirements.txt', 'w+') as f:

# f.write(library)

#!mkdir wheelhouse && pip download -r requirements.txt -d wheelhouse

# # Move requrements

# !mv requirements.txt wheelhouse/requirements.txt

## Zip it up and then you can download

# import os

# from zipfile import ZipFile

# dirName = "./"

# zipName = "packages.zip"

# # Create a ZipFile Object

# with ZipFile(zipName, 'w') as zipObj:

# # Iterate over all the files in directory

# for folderName, subfolders, filenames in os.walk(dirName):

# for filename in filenames:

# if (filename != zipName):

# # create complete filepath of file in directory

# filePath = os.path.join(folderName, filename)

# # Add file to zip

# zipObj.write(filePath)

# create a new dataset

# Take that zip file and add it to a dataset + button -> new dataset -> add all you need -> use as input here

#TURN OFF INTERNET

# wipe before any run. test. submission errors are no fun.

#!rm -rf /kaggle/working/*

# !cp -r /kaggle/input/pip-installs/wheelhouse /kaggle/working/

# !cp -r /kaggle/input/pip-installs/benepar_en3 /kaggle/working/

# !pip install --no-index --find-links=/kaggle/working/wheelhouse /kaggle/working/wheelhouse/benepar-0.2.0/benepar-0.2.0

# import sys

# sys.path.append("/kaggle/input/pip-installs/wheelhouse/sentence-transformers-2.2.2/sentence-transformers-2.2.2")

# import sentence_transformers

# sys.path.append("/kaggle/input/pip-installs/wheelhouse/empath-0.89/empath-0.89")

# from empath import Empath

# # Creating this in realtime just in case we have to add-remove.

# requirements = """

# textstat

# clearml

# optuna

# interpret

# torchsummary

# """

# with open('/kaggle/working/requirements.txt', 'w') as f:

# f.write(requirements)

# ## install

# !pip install -r /kaggle/working/requirements.txt --no-index --find-links /kaggle/input/pip-installs/wheelhouse

# !pip install --no-index --find-links=/kaggle/working/wheelhouse torchsummary

# # ## Prepare Benepar

# import sys

# import spacy

# import benepar

# import torchsummary

# # fixes ealier issue

# sys.path.insert(0, '/kaggle/working/')

# nlp = spacy.load('en_core_web_lg')

# nlp.add_pipe("benepar", config={"model": "benepar_en3"})

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import os

import random

import torch

import logging

from clearml.automation.controller import PipelineDecorator

from clearml import TaskTypes, PipelineController, StorageManager, Dataset, Task

from clearml import InputModel, OutputModel

from IPython.display import display

import ipywidgets as widgets

from tqdm import tqdm

import time

import pickle

import markdown

from bs4 import BeautifulSoup

import re

import nltk

from nltk.tokenize import word_tokenize

from nltk.stem import WordNetLemmatizer

torch.manual_seed(42)

if torch.cuda.is_available():

torch.cuda.manual_seed(42)

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = False

# Set random seed for NumPy

np.random.seed(42)

# Set random seed for random module

random.seed(42)

#os.environ['OPENAI_API_KEY'] =

class CFG:

DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

CLEAR_ML_TRAINING_DATASET_ID = 'e71bc7e41b114a549ac1eaf1dff43099'

CLEAR_ML_KAGGLE_TRAIN_DATA = '24596ea241c34c6eb5013152a6122e48'

CLEAR_ML_AI_GENERATED_ESSAYS = '593fff56e3784e4fbfa4bf82096b0127'

CLEAR_ML_AI_REWRITTEN_ESSAYS = '624315dd0e9b4314aa266654ebd71918'

DATA_ETL_STRATEGY = 1

TRAINING_DATA_COUNT = 50000

CLEARML_OFFLINE_MODE = False

CLEARML_ON = False

KAGGLE_INPUT = '/kaggle/input'

SCRATCH_PATH = 'scratch'

ARTIFACTS_PATH = 'artifacts'

TRANSFORMERS_PATH = 'benepar'

ENSAMBLE_STRATEGY = 2

KAGGLE_RUN = False

SUBMISSION_RUN = True

EXPLAIN_CODE=False

BERT_MODEL = 'bert-base-uncased'

EBM_ONLY = False

RETRAIN=True

cfg_dict = {key: value for key, value in CFG.__dict__.items() if not key.startswith('__')}

feature_list = list()

import clearml

class ClearMLTaskHandler:

def __init__(self, project_name, task_name, config=None):

self.task = self.get_or_create_task(project_name, task_name)

self.logger = None # Initialize logger attribute

self.setup_widget_logger()

if config:

self.set_config(config)

def get_or_create_task(self, project_name, task_name):

try:

tasks = []

if(CFG.CLEARML_OFFLINE_MODE):

Task.set_offline(offline_mode=True)

else:

tasks = Task.get_tasks(project_name=project_name, task_name=task_name)

if tasks:

if(tasks[0].get_status() == "created" and task[0].task_name == task_name):

task = tasks[0]

return task

else:

if(CFG.CLEARML_OFFLINE_MODE):

Task.set_offline(offline_mode=True)

task = Task.init(project_name=project_name, task_name=task_name)

return task

else:

if(CFG.CLEARML_OFFLINE_MODE):

Task.set_offline(offline_mode=True)

task = Task.init(project_name=project_name, task_name=task_name)

else:

task = Task.init(project_name=project_name, task_name=task_name)

return task

except Exception as e:

print(f"Error occurred while searching for existing task: {e}")

return None

def set_parameters(self, parameters):

"""

Set hyperparameters for the task.

:param parameters: Dictionary of parameters to set.

"""

self.task.set_parameters(parameters)

def set_config(self, config):

if isinstance(config, dict):

self.task.connect(config)

elif isinstance(config, argparse.Namespace):

self.task.connect(config.__dict__)

elif isinstance(config, (InputModel, OutputModel, type, object)):

self.task.connect_configuration(config)

else:

logging.warning("Unsupported configuration type")

def log_data(self, data, title):

self.task.get_logger()

if isinstance(data, np.ndarray):

self.task.get_logger().report_image(title, 'array', iteration=0, image=data)

elif isinstance(data, pd.DataFrame):

self.task.get_logger().report_table(title, 'dataframe', iteration=0, table_plot=data)

elif isinstance(data, str) and os.path.exists(data):

self.task.get_logger().report_artifact(title, artifact_object=data)

else:

self.task.get_logger().report_text(f"{title}: {data}")

def upload_artifact(self, name, artifact):

"""

Upload an artifact to the ClearML server.

:param name: Name of the artifact.

:param artifact: Artifact object or file path.

"""

self.task.upload_artifact(name, artifact_object=artifact)

def get_artifact(self, name):

"""

Retrieve an artifact from the ClearML server.

:param name: Name of the artifact to retrieve.

:return: Artifact object.

"""

return self.task.artifacts[name].get()

def setup_widget_logger(self):

handler = OutputWidgetHandler()

handler.setFormatter(logging.Formatter('%(asctime)s - [%(levelname)s] %(message)s'))

self.logger = logging.getLogger() # Create a new logger instance

self.logger.addHandler(handler)

self.logger.setLevel(logging.INFO)

# Just in case we can't use clearml in kaggle

class OutputWidgetHandler(logging.Handler):

def __init__(self, *args, **kwargs):

super(OutputWidgetHandler, self).__init__(*args, **kwargs)

layout = {'width': '100%', 'border': '1px solid black'}

self.out = widgets.Output(layout=layout)

def emit(self, record):

formatted_record = self.format(record)

new_output = {'name': 'stdout', 'output_type': 'stream', 'text': formatted_record+'\n'}

self.out.outputs = (new_output, ) + self.out.outputs

def show_logs(self):

display(self.out)

def clear_logs(self):

self.out.clear_output()

# Keeping this out for simpicity

def upload_dataset_from_dataframe(dataframe, new_dataset_name, dataset_project, description="", tags=[], file_name="dataset.pkl"):

from pathlib import Path

from clearml import Dataset

import pandas as pd

import logging

try:

print(dataframe.head())

file_path = Path(file_name)

pd.to_pickle(dataframe, file_path)

new_dataset = Dataset.create(new_dataset_name,dataset_project, description=description)

new_dataset.add_files(str(file_path))

if description:

new_dataset.set_description(description)

if tags:

new_dataset.add_tags(tags)

new_dataset.upload()

new_dataset.finalize()

return new_dataset

except Exception as e:

return logging.error(f"Error occurred while uploading dataset: {e}")

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

( ͡° ͜ʖ ͡°) 𝖘𝖍𝖆K𝖊𝖘𝖕𝖊𝖆𝖗𝖊 𝖜𝖗o𝖙𝖊 𝖈o𝖉𝖊

⇟ Just toss explain_code(_i) into your cell, and voilà – it's like touching

a hot stove.

more info ⇟

[8] wikipedia

𝖘𝖍𝖆K𝖊𝖘𝖕𝖊𝖆𝖗𝖊?

- Deep Engagement: It encourages you to slow down and deeply engage with the content.

- Language Skill Enhancement: Instantly boosts your language skills. :)

- Programming and Language: Highlights the fact that programming is more about language than number crunching.

- Research Backing: Studies indicate that factors like fluid reasoning and language aptitude are crucial in understanding programming languages. [1]

- First Time: there is a good chance you've never read code explained like this.

𝖘𝖊T𝖚𝖕

- Config Section Update:

- Set your OpenAI API key:

os.environ['OPENAI_API_KEY'] = 'your_key'. - In the CFG object, set

EXPLAIN_CODE=True.

- Set your OpenAI API key:

- Code Explanation:

- Add

explain_code(_i)at the end of complex code cells.

- Add

- Execution:

- Run the cell and prepare for both enlightenment and a bit of humor.

from IPython.display import display, Markdown

import ipywidgets as widgets

from openai import OpenAI

client = OpenAI()

model = "gpt-4-1106-preview"

max_chars = 500

def query_openai_api(model, cell_contents, max_chars=500):

content = "" # Initialize the content variable

stream = client.chat.completions.create(

model=model,

response_format={"type": "text"},

messages=[

{"role": "system", "content": cell_contents },

{"role": "user", "content": "Analyze the code in the system message. Give a 3 sentence brief. Use a mild shakespearean tone based on a random charater from one of shakespears plays . Then, display the rest of explanation as a Markdown bullet list. Do not use a greetings. Focus on brevity and clarity. "}

],

max_tokens=max_chars,

temperature=0.7,

stream=True,

)

content = ""

display(Markdown(content))

for chunk in stream:

content += chunk.choices[0].delta.content or ""

if chunk.choices[0].finish_reason == "stop":

break

display(Markdown(content))

def explain_code(cell_contents):

loading_icon = widgets.HTML(value="")

loading_icon.layout.display = "none" # Hide the loading icon initially

output = widgets.Output()

def on_button_click(b):

with output:

loading_icon.layout.display = "block" # Show the loading icon

query_openai_api(model, cell_contents, max_chars)

loading_icon.layout.display = "none" # Hide the loading icon

button = widgets.Button(description="UNRAVEL MYSTERY", tooltip="Click to explain the code in this cell using gpt-4-1106-preview")

button.style.font_weight = "bold"

hbox = widgets.HBox([button, loading_icon])

button.background_color = "#05192D"

button.button_style= "primary"

button.style.width = "700px"

button.on_click(on_button_click)

display(hbox)

display(output)

if(CFG.EXPLAIN_CODE):

explain_code(_i)

🤣 𝕰x𝖆M𝖕l𝖊 ↑ 🤣

❝ Verily, the script before us, a modern parchment, doth invoke the spirits of computation to unravel the mysteries enfolded within its characters. Like Prospero's conjuring of airs and whispers, this code beckons forth answers from the aether, seeking knowledge with a sprite's swiftness. Yet not with charms or spells, but with the silent tongues of Python and OpenAI's grand oracle, it reveals its secrets. ❞

- The script creates an interactive widget within a Jupyter notebook that allows users to submit Python code for analysis.

- Upon clicking the "UNRAVEL MYSTERY" button, the code is sent to the OpenAI API, which uses the GPT-4 model to generate an explanation of the provided code snippet.

- The explanation is then displayed within the notebook, styled in Markdown for ease of reading.

- The function query_openai_api is responsible for communicating with the OpenAI API, handling the response, and formatting it as Markdown content.

- A loading icon is displayed while the API processes the request, providing visual feedback that the operation is in progress.

- The explain_code function sets up the interactive components, including the button and output area, and handles the button click event.

- The on_button_click function is the event handler that triggers the API call and manages the display of the loading icon and output.

Note on Functionality

- Current Limitation: Presently, it works on a 'load-and-reveal' basis - I want it to stream.

- Future Updates: Would like to get the streaming to work. :)

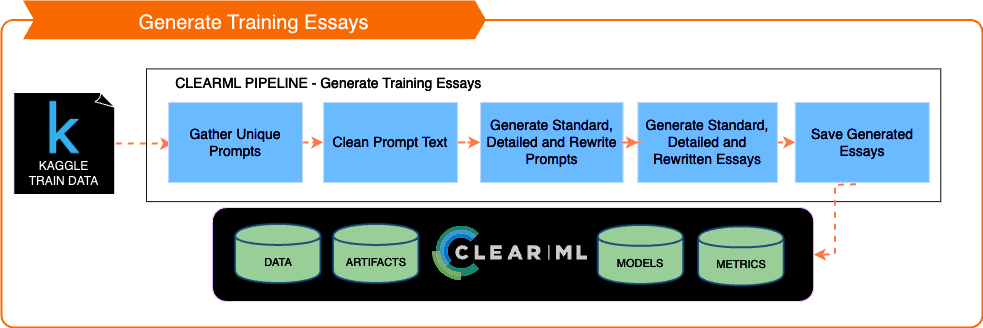

➠ Framework for Generating Essays

Quick overview. What are some ways students might use LLM's to conceal the origin of the essays?

- Simple Topic Essay Nothing fancy, simple instructions and the prompt

- Getting Creative Now we need to build a prompt that will fool the baseline model.

- Occasional grammatical errors: Introduce minor grammatical mistakes that a student might make under exam conditions or in a final draft, such as slight misuse of commas, or occasional awkward phrasing.

- Varying sentence structure: Use a mix of simple, compound, and complex sentences, with some variation in fluency to reflect a student's developing writing style.

- Personal touch: Include personal opinions, anecdotes, or hypothetical examples where appropriate, to give the essay a unique voice.

- Argument depth: While the essay should be well-researched and informed, the depth of argument might not reach the sophistication of a more experienced writer. Arguments should be sound but might lack the nuance a more advanced writer would include.

- Conclusion: Ensure the essay has a clear conclusion, but one that might not fully encapsulate all the complexities of the topic, as a student might struggle to tie all threads together neatly.

Remember, the goal is to create a piece that balances high-quality content with the authentic imperfections of a human student writer. The essay should be on the following topic: + prompt

- Rewrite Prompt That is where I would get caught. I love using AI to assist with rewrites and grammar checks.

Prompts were used from a competition dataset. https://www.kaggle.com/datasets/alejopaullier/daigt-external-dataset (original_moth)

The code for generating the essays is here: https://github.com/mikewlange/ai-or-human/blob/main/generate_essays_pipeline.ipynb

if(CFG.CLEARML_ON):

clearml_handler = ClearMLTaskHandler(

project_name='LLM-detect-ai-gen-text-LIVE/dev/notebook/preprocess',

task_name='Load Data and Generate Features'

)

clearml_handler.set_parameters({'etl_strategy': cfg_dict['DATA_ETL_STRATEGY'], 'train_data_count': cfg_dict['TRAINING_DATA_COUNT']})

clearml_handler.set_config(cfg_dict)

task = clearml_handler.task

def download_dataset_as_dataframe(dataset_id='593fff56e3784e4fbfa4bf82096b0127', file_name="ai_generated.pkl"):

import pandas as pd

# import Dataset from clearml

from clearml import Dataset

dataset = Dataset.get(dataset_id, only_completed=True)

cached_folder = dataset.get_local_copy()

for file_name in os.listdir(cached_folder):

if file_name.endswith('.pkl'):

file_path = os.path.join(cached_folder, file_name)

dataframe = pd.read_pickle(file_path)

return dataframe

raise FileNotFoundError("No PKL file found in the dataset.")

def download_dataset_as_dataframe_csv(dataset_id='593fff56e3784e4fbfa4bf82096b0127', file_name="ai_generated_essays.csv"):

import pandas as pd

# import Dataset from clearml

extension = file_name.split('.')[-1]

from clearml import Dataset

dataset = Dataset.get(dataset_id, only_completed=True)

cached_folder = dataset.get_local_copy()

for file_name in os.listdir(cached_folder):

if file_name.endswith(extension):

file_path = os.path.join(cached_folder, file_name)

dataframe = pd.read_csv(file_path)

return dataframe

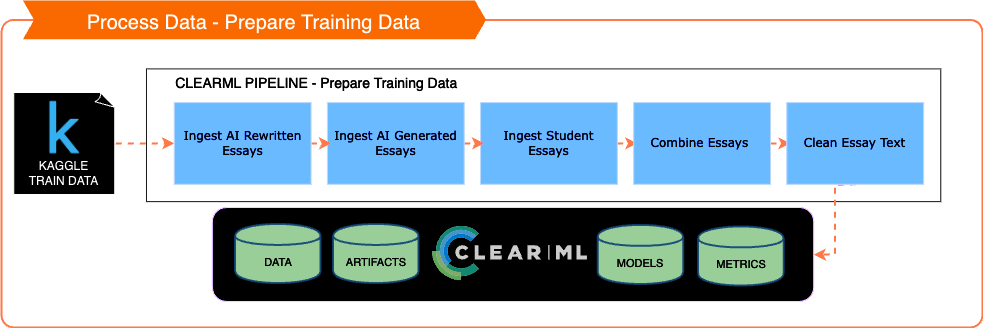

kaggle_training_data = download_dataset_as_dataframe_csv(dataset_id=CFG.CLEAR_ML_KAGGLE_TRAIN_DATA,file_name="train_v2_drcat_02__final.csv")[['text','label','source']]

ai_generated_essays = download_dataset_as_dataframe(dataset_id=CFG.CLEAR_ML_AI_GENERATED_ESSAYS,file_name="ai_generated.pkl")[['text','label','source']]

ai_rewritten_essays = download_dataset_as_dataframe(dataset_id=CFG.CLEAR_ML_AI_REWRITTEN_ESSAYS,file_name="ai_rewritten_essays.pkl")[['text','label','source']]

random_kaggle_training_data = kaggle_training_data[kaggle_training_data['label'] == 1].sample(n=10000) # from kaggle dataset

random_generated_training_data = ai_generated_essays[ai_generated_essays['label'] == 1].sample(n=10000) # via the essay generation pipelint gpt3.5-4 essays written by AI and rewrittn

kaggle_training_student = kaggle_training_data[kaggle_training_data['label'] == 0].sample(n=12000)

random_kaggle_training_data = random_kaggle_training_data.dropna(subset=['text'])

random_generated_training_data = random_generated_training_data.dropna(subset=['text'])

kaggle_training_student = kaggle_training_student.dropna(subset=['text'])

combined_data = pd.concat([random_generated_training_data,random_kaggle_training_data, kaggle_training_student], ignore_index=True)

df_combined = combined_data.reset_index(drop=True)

df_combined.drop_duplicates(inplace=True)

df_essays = df_combined[['text', 'label', 'source']].copy()

sample = int(CFG.TRAINING_DATA_COUNT / 2)

df_label_0 = df_essays[df_essays['label'] == 0].sample(n=2000, random_state=42)

df_label_1 = df_essays[df_essays['label'] == 1].sample(n=2000, random_state=42) #<- example of data leakage

combined_df = pd.concat([df_label_1, df_label_0], ignore_index=True)

combined_df = combined_df.dropna()

df_essays = combined_df.reset_index(drop=True)

import plotly.graph_objects as go

import matplotlib.pyplot as plt

def plot_label_distribution(df_essays, plots, task=None):

if(plots == 1):

label_1_counts = df_essays[df_essays['label'] == 1].groupby('source').size()

label_0_counts = df_essays[df_essays['label'] == 0].groupby('source').size()

data=[

go.Bar(name='Label 0', x=label_0_counts.index, y=label_0_counts.values),

go.Bar(name='Label 1', x=label_1_counts.index, y=label_1_counts.values)

]

fig1 = go.Figure(data=data)

fig1.update_layout(

title='Counts of Label 0 and Label 1 per Source',

xaxis_title='Source',

yaxis_title='Count',

barmode='group'

)

if(CFG.CLEARML_ON):

task.get_logger().report_plotly(title="Counts of Label 0 and Label 1 per Source", series="data", figure=fig1)

# Show the chart using Plotly

fig1.show()

label_counts = df_essays['label'].value_counts().sort_index()

print("Label Counts:")

for label, count in label_counts.items():

print(f"Label {label}: {count}")

plt.bar(['0', '1'], label_counts.values)

plt.xlabel('label')

plt.ylabel('Count')

plt.title('Distribution of label Values in df_essays')

plt.show()

plot_label_distribution(df_essays,1)

if(CFG.EXPLAIN_CODE):

explain_code(_i)

INFO:matplotlib.category:Using categorical units to plot a list of strings that are all parsable as floats or dates. If these strings should be plotted as numbers, cast to the appropriate data type before plotting.

INFO:matplotlib.category:Using categorical units to plot a list of strings that are all parsable as floats or dates. If these strings should be plotted as numbers, cast to the appropriate data type before plotting.

Label Counts:

Label 0: 2000

Label 1: 2000

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

def pipeline_preprocess_text(df):

PUNCTUATION_TO_RETAIN = '.?!,'

def preprocess_pipeline(text):

try:

# Remove markdown formatting

html = markdown.markdown(text)

text = BeautifulSoup(html, features="html.parser").get_text()

text = re.sub(r'[\n\r]+', ' ', text)

text = ' '.join(text.split())

text = re.sub(r'^(?:Task(?:\s*\d+)?\.?\s*)?', '', text)

text = re.sub('\n+', '', text)

text = re.sub(r'[A-Z]+_[A-Z]+', '', text)

punctuation_to_remove = r'[^\w\s' + re.escape(PUNCTUATION_TO_RETAIN) + ']'

text = re.sub(punctuation_to_remove, '', text)

tokens = word_tokenize(text)

lemmatizer = WordNetLemmatizer()

lemmatized_tokens = [lemmatizer.lemmatize(token) for token in tokens]

return ' '.join(lemmatized_tokens)

except Exception as e:

logging.error(f"Error in preprocess_pipeline: {e}")

return text

tqdm.pandas()

start_time = time.time()

df['text'] = df['text'].progress_apply(preprocess_pipeline)

end_time = time.time()

print(f"Preprocessing completed in {end_time - start_time:.2f} seconds")

return df

df_essays = pipeline_preprocess_text(df_essays)

if(CFG.CLEARML_ON):

plot_label_distribution(df_essays, 0, task=clearml_handler.task)

clearml_handler.task.upload_artifact(f'df_essays_train_preprocessed_{CFG.DATA_ETL_STRATEGY}', artifact_object=df_essays)

clearml_handler.task.get_logger().report_table(title='df_essays_train_preprocessed_',series='Train Essays Cleaned',

iteration=0,table_plot=df_essays)

if(CFG.EXPLAIN_CODE):

explain_code(_i)

0%| | 0/4000 [00:00<?, ?it/s]

100%|██████████| 4000/4000 [00:24<00:00, 160.03it/s]

Preprocessing completed in 25.01 seconds

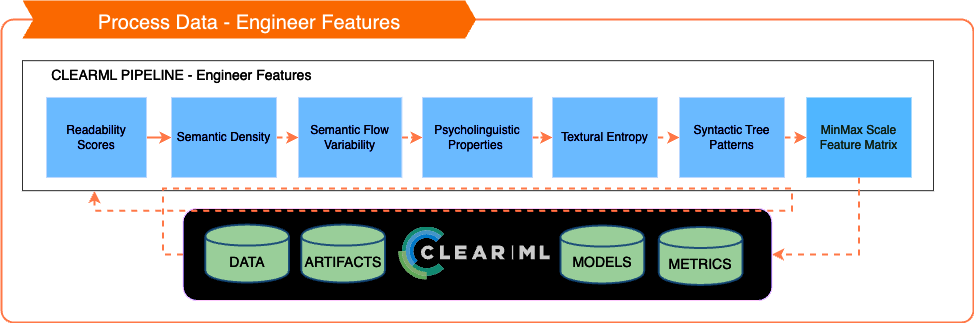

⁖ 📲 Engineer Features

Key Analytical Areas

-

Readability Scores:

- Identifying unique patterns in AI vs. human-written essays.

- Analysis using scores like

Flesch-Kincaid Grade Level,Gunning Fog Index, etc.

-

Semantic Density:

- Understanding the concentration of meaning-bearing words in AI-generated vs. human text.

-

Semantic Flow Variability:

- Examining idea transitions between sentences in human and AI-generated texts.

-

Psycholinguistic Features:

- Using the LIWC tool for psychological and emotional content evaluation.

-

Textual Entropy:

- Measuring unpredictability or randomness, focusing on differences between AI and human content.

-

Syntactic Tree Patterns:

- Parsing essays to analyze syntactic tree patterns, especially structural tendencies in language models.

Ethical Considerations

- Content Bias: Ensuring to avoid generating discriminative content bias, focusing mainly on stat features.

- Potential Bias in Tools: Considering if tools like LIWC (empath) and the Readability Scores might introduce bias.

Note: These considerations are essential in maintaining the integrity and fairness of our analysis.

📊 Feature Distribution Statistics

Example:

Understanding Key Statistical Concepts

-

T-Test p-value:

- Purpose: Determines if differences between groups are statistically significant.

- Interpretation: A low p-value (< 0.05) suggests significant differences, challenging the null hypothesis.

-

Mann-Whitney U p-value:

- Usage: Ideal for non-normally distributed data, comparing two independent samples.

- Significance: Similar to the T-test, a lower p-value indicates notable differences between the groups.

-

Kruskal-Wallis p-value:

- Application: Used for comparing more than two independent samples.

- Meaning: A low p-value implies significant variance in at least one of the samples from the others.

-

Cohen's d:

- Function: Measures the standardized difference between two means.

- Values: Interpreted as small (0.2), medium (0.5), or large (0.8) effects.

-

Glass's delta:

- Comparison with Cohen's d: Similar in purpose but uses only the standard deviation of one group for normalization.

- Utility: Effective when the groups' standard deviations differ significantly.

Note on Sample Size and Statistical Tests

- Small Samples (Under 5000 Records): T-Test, Mann-Whitney U, and Kruskal-Wallis tests are effective.

- Large Samples (Over 5000 Records): Focus on effect sizes (Cohen's d and Glass's delta), as p-values will generally approach 0.

def plot_feature_distribution(df_essays, categories_to_plot, show_plot=True):

import plotly.graph_objects as go

import pandas as pd

import numpy as np

from scipy import stats

def cohens_d(x, y):

nx, ny = len(x), len(y)

dof = nx + ny - 2

return (np.mean(x) - np.mean(y)) / np.sqrt(((nx - 1) * np.std(x, ddof=1) ** 2 + (ny - 1) * np.std(y, ddof=1) ** 2) / dof)

def glass_delta(x, y):

return (np.mean(x) - np.mean(y)) / np.std(x, ddof=1)

for category in categories_to_plot:

df_filtered = df_essays[df_essays[category].astype(float) > 0]

generated_0 = df_filtered[df_filtered["label"] == 0][category].astype(float)

generated_1 = df_filtered[df_filtered["label"] == 1][category].astype(float)

# Statistical tests and effect size calculations

ttest_results = stats.ttest_ind(generated_0, generated_1, equal_var=False)

d_value = cohens_d(generated_0, generated_1)

delta_value = glass_delta(generated_0, generated_1)

u_statistic, p_value = stats.mannwhitneyu(generated_0, generated_1, alternative="two-sided")

k_statistic, p_value_k = stats.kruskal(generated_0, generated_1)

# Log scalar metrics

# logger.report_scalar(title="T-Test p-value", series=category, value=ttest_results.pvalue, iteration=0)

# logger.report_scalar(title="Cohen's d", series=category, value=d_value, iteration=0)

# logger.report_scalar(title="Glass's delta", series=category, value=delta_value, iteration=0)

annotations = (

f"<b>T-Test p-value:</b> {ttest_results.pvalue:.2e} <b> Mann-Whitney U p-value:</b> {p_value:.2e}<br>"

f"<b>Kruskal-Wallis p-value:</b> {p_value_k:.2e} <b> Cohen's d:</b>{d_value:.2f} <b> Glass's delta:</b>{delta_value:.2f}<br><br><br>"

)

fig = go.Figure()

data=[

go.Histogram(x=generated_0, name='Student', opacity=0.6),

go.Histogram(x=generated_1, name='AI', opacity=0.6)

]

fig.add_trace(data[0])

fig.add_trace(data[1])

condition = False

if (delta_value < -0.50) or (delta_value > 0.5):

condition = True

txt = 'title'

if condition:

title_text = f'<b>Distribution of {category.capitalize()}<span style="font-size: 30px; color: gold;">★</span></b>'

feature_list.append(category)

else:

title_text = f'<b>Distribution of {category.capitalize()}</b>'

fig.update_layout(

barmode='overlay',

title_text=title_text,

xaxis_title=f"{category.capitalize()}",

yaxis_title="Density",

annotations=[dict(

text=annotations,

x=.01,

y=-.25,

xref="paper",

yref="paper",

align="left",

showarrow=False,

bordercolor="#000000",

borderwidth=.3

)],

legend=dict(

orientation="v",

x=1.02,

y=1.0,

bgcolor="rgba(254, 255, 255, 0.5)",

bordercolor="#000000",

borderwidth=.3

),

margin=dict(l=100, r=100, b=100)

)

if(show_plot):

fig.show()

if(CFG.CLEARML_ON):

Task.current_task().get_logger().report_plotly(title=f"{category.capitalize()}", series="data", figure=fig)

Task.current_task().get_logger().report_single_value("T-Test p-value: ",ttest_results.pvalue)

Task.current_task().get_logger().report_single_value("Mann-Whitney U p-value: ",p_value)

Task.current_task().get_logger().report_single_value("Cohen's d: ",d_value)

Task.current_task().get_logger().report_single_value("Glass's delta:",delta_value)

⁖ 📲 Features

ƒ(①) Readability Scores

➠ Flesch-Kincaid Grade Level

This test gives a U.S. school grade level; for example, a score of 8 means that an eighth grader can understand the document. The lower the score, the easier it is to read the document. The formula for the Flesch-Kincaid Grade Level (FKGL) is:

$ FKGL = 0.39 \left( \frac{\text{total words}}{\text{total sentences}} \right) + 11.8 \left( \frac{\text{total syllables}}{\text{total words}} \right) - 15.59 $

Source: Wikipedia

➠ Gunning Fog Index

The Gunning Fog Index is a readability test designed to estimate the years of formal education a person needs to understand a text on the first reading. The index uses the average sentence length (i.e., the number of words divided by the number of sentences) and the percentage of complex words (words with three or more syllables) to calculate the score. The higher the score, the more difficult the text is to understand.

$ GunningFog = 0.4 \left( \frac{\text{words}}{\text{sentences}} + 100 \left( \frac{\text{complex words}}{\text{words}} \right) \right) $

In this formula:

- "Words" is the total number of words in the text.

- "Sentences" is the total number of sentences in the text.

- "Complex words" are words with three or more syllables, not including proper nouns, familiar jargon or compound words, or common suffixes such as -es, -ed, or -ing as a syllable.

The Gunning Fog Index is particularly useful for ensuring that texts such as technical reports, business communications, and journalistic works are clear and understandable for the intended audience.

Source: Wikipedia

➠ Coleman-Liau Index

The Coleman-Liau Index is a readability metric that estimates the U.S. grade level needed to comprehend a text. Unlike other readability formulas, it relies on characters instead of syllables per word, which can be advantageous for processing efficiency. The index is calculated using the average number of letters per 100 words and the average number of sentences per 100 words.

$ CLI = 0.0588 \times L - 0.296 \times S - 15.8 $

Where:

- L is the average number of letters per 100 words.

- S is the average number of sentences per 100 words.

Source: Wikipedia

➠ SMOG Index

The SMOG (Simple Measure of Gobbledygook) Index is a measure of readability that estimates the years of education needed to understand a piece of writing. It is calculated using the number of polysyllable words and the number of sentences. The SMOG Index is considered accurate for texts intended for consumers.

$ SMOG = 1.043 \times \sqrt{M \times \frac{30}{S}} + 3.1291 $

- M is the number of polysyllable words (words with three or more syllables).

- S is the number of sentences.

Source: Wikipedia

➠ Automated Readability Index (ARI)

The Automated Readability Index is a readability test designed to gauge the understandability of a text. The formula outputs a number that approximates the grade level needed to comprehend the text. The ARI uses character counts, which makes it suitable for texts with a standard character-per-word ratio.

$ ARI = 4.71 \times \left( \frac{\text{characters}}{\text{words}} \right) + 0.5 \times \left( \frac{\text{words}}{\text{sentences}} \right) - 21.43 $

Where:

- The number of characters is divided by the number of words.

- The number of words is divided by the number of sentences.

Source: wikipedia

➠ Dale-Chall Readability Score

The Dale-Chall Readability Score is unique in that it uses a list of words that are familiar to fourth-grade American students. The score indicates how many years of schooling someone would need to understand the text. If the text contains more than 5% difficult words (words not on the Dale-Chall familiar words list), a penalty is added to the score.

$ DaleChall = 0.1579 \times \left( \frac{\text{difficult words}}{\text{total words}} \times 100 \right) + 0.0496 \times \left( \frac{\text{total words}}{\text{sentences}} \right) $

$ \text{If difficult words} > 5\%: DaleChall = DaleChall + 3.6365 $

"Difficult words" are those not on the Dale-Chall list of familiar words.

Source: wikipedia

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

tqdm.pandas()

# Start time

start_time = time.time()

#@PipelineDecorator.component(return_values=["df"], name='Readability Scores', task_type=TaskTypes.data_processing)

def process_readability_scores(df):

import logging

import textstat

try:

df['flesch_kincaid_grade'] = df['text'].progress_apply(textstat.flesch_kincaid_grade)

df['gunning_fog'] = df['text'].progress_apply(textstat.gunning_fog)

df['coleman_liau_index'] = df['text'].progress_apply(textstat.coleman_liau_index)

df['smog_index'] = df['text'].progress_apply(textstat.smog_index)

df['ari'] = df['text'].progress_apply(textstat.automated_readability_index)

df['dale_chall'] = df['text'].progress_apply(textstat.dale_chall_readability_score)

return df

except Exception as e:

logger.error(f"Error in process_readability_scores: {e}")

raise

%timeit

df_essays = process_readability_scores(df_essays)

end_time = time.time()

duration = end_time - start_time

print(f"Process completed in {duration:.2f} seconds")

0%| | 0/4000 [00:00<?, ?it/s]

100%|██████████| 4000/4000 [00:04<00:00, 996.32it/s]

100%|██████████| 4000/4000 [00:03<00:00, 1116.60it/s]

100%|██████████| 4000/4000 [00:00<00:00, 4238.36it/s]

100%|██████████| 4000/4000 [00:03<00:00, 1005.83it/s]

100%|██████████| 4000/4000 [00:00<00:00, 4843.97it/s]

100%|██████████| 4000/4000 [00:03<00:00, 1072.18it/s]

Process completed in 17.10 seconds

categories_to_plot = [

'flesch_kincaid_grade', 'gunning_fog', 'coleman_liau_index', 'smog_index', 'ari', 'dale_chall'

]

condition = True # Set the condition here

plot_feature_distribution(df_essays, categories_to_plot,True)

ƒ(②) Semantic Density

Calculating Semantic Density: The function calculate_semantic_density

computes this metric by determining the ratio of meaning-bearing words (identified by tags in

mb_tags) to the total word count. A higher semantic density indicates a text that efficiently

uses words with substantial meaning.

import nltk

from nltk.tokenize import word_tokenize

import string

# Start time

start_time = time.time()

def process_semantic_density(df):

# Configure logging

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

def get_meaning_bearing_tags():

return {'NN', 'NNS', 'NNP', 'NNPS', 'VB', 'VBD', 'VBG', 'VBN', 'VBP', 'VBZ', 'JJ', 'JJR', 'JJS', 'RB', 'RBR', 'RBS'}

def tokenize_text(text):

try:

return word_tokenize(text.lower())

except TypeError as e:

logging.error(f"Error tokenizing text: {e}")

return []

def tag_words(words):

try:

return nltk.pos_tag(words)

except Exception as e:

logging.error(f"Error tagging words: {e}")

return []

def filter_words(tokens):

return [token for token in tokens if token.isalpha() or token in string.punctuation]

mb_tags = get_meaning_bearing_tags()

def process_row(text):

tokens = tokenize_text(text)

words = filter_words(tokens)

tagged = tag_words(words)

mb_words = [word for word, tag in tagged if tag in mb_tags]

full_sentence = " ".join(word + "/" + tag for word, tag in tagged)

density = len(mb_words) / len(words) if words else 0

return density, full_sentence

# Vectorized operations for DataFrame

df[['semantic_density', 'text_tagged_nltk']] = df['text'].progress_apply(lambda x: pd.Series(process_row(x)))

return df

%timeit

# run

df_essays = process_semantic_density(df_essays)

end_time = time.time()

# Calculate duration

duration = end_time - start_time

print(f"Process completed in {duration:.2f} seconds")

if(CFG.EXPLAIN_CODE):

explain_code(_i)

0%| | 0/4000 [00:00<?, ?it/s]

100%|██████████| 4000/4000 [01:22<00:00, 48.31it/s]

Process completed in 82.80 seconds

categories_to_plot = [

'semantic_density'

]

condition = True # Set the condition here

plot_feature_distribution(df_essays, categories_to_plot,True)

ƒ(⓷) Semantic Flow Variability

The model's approach, based on contrastive learning, is key to its effectiveness. It excels in distinguishing sentence pairs from random samples, aligning closely with the study's objective to analyze semantic flow.

import concurrent.futures

import logging

import nltk

import numpy as np

from sentence_transformers import SentenceTransformer

import torch

from tqdm import tqdm

tqdm.pandas()

import time

# Start time

start_time = time.time()

def process_semantic_flow_variability(df):

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

logger = logging.getLogger(__name__)

# Load a pre-trained sentence transformer model

model_MiniLM = 'all-MiniLM-L6-v2' #https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2

try:

model = SentenceTransformer(model_MiniLM)

except Exception as e:

logger.error(f"Error loading the sentence transformer model: {e}")

model = None

def cosine_similarity(v1, v2):

return torch.dot(v1, v2) / (torch.norm(v1) * torch.norm(v2))

def semantic_flow_variability(text):

if not model:

logger.error("Model not loaded. Cannot compute semantic flow variability.")

return np.nan

try:

sentences = nltk.sent_tokenize(text)

if len(sentences) < 2:

logger.info("Not enough sentences for variability calculation.")

return 0

sentence_embeddings = model.encode(sentences, convert_to_tensor=True, show_progress_bar=False)

# Calculate cosine similarity between consecutive sentences

similarities = [cosine_similarity(sentence_embeddings[i], sentence_embeddings[i+1])

for i in range(len(sentence_embeddings)-1)]

return torch.std(torch.stack(similarities)).item()

except Exception as e:

logger.error(f"Error calculating semantic flow variability: {e}")

return np.nan

if df is not None and 'text' in df:

# with concurrent.futures.ThreadPoolExecutor() as executor:

df['semantic_flow_variability'] = df['text'].progress_apply(semantic_flow_variability)

else:

logger.error("Invalid DataFrame or missing 'text' column.")

return df

%timeit

df_essays = process_semantic_flow_variability(df_essays)

end_time = time.time()

# Calculate duration

duration = end_time - start_time

print(f"Process completed in {duration:.2f} seconds")

# Explain the code in the cell. Add this line to each cell

if(CFG.EXPLAIN_CODE):

explain_code(_i)

INFO:sentence_transformers.SentenceTransformer:Load pretrained SentenceTransformer: all-MiniLM-L6-v2

INFO:sentence_transformers.SentenceTransformer:Use pytorch device: cpu

20%|██ | 807/4000 [01:03<04:06, 12.94it/s]INFO:__main__:Not enough sentences for variability calculation.

77%|███████▋ | 3099/4000 [04:01<01:01, 14.60it/s]INFO:__main__:Not enough sentences for variability calculation.

87%|████████▋ | 3491/4000 [04:24<00:36, 13.97it/s]INFO:__main__:Not enough sentences for variability calculation.

100%|██████████| 4000/4000 [04:55<00:00, 13.52it/s]

Process completed in 296.17 seconds

categories_to_plot = [

'semantic_flow_variability'

]

condition = True # Set the condition here

plot_feature_distribution(df_essays, categories_to_plot,True)

ƒ(⓸) Psycholinguistic Features

Psycholinguistic Features encompass the linguistic and psychological characteristics evident in speech and writing. These features provide insights into the writer's or speaker's psychological state, cognitive processes, and social dynamics. Analysis in this domain often involves scrutinizing word choice, sentence structure, and language patterns to deduce emotions, attitudes, and personality traits.

The Linguistic Inquiry and Word Count (LIWC) [3] is a renowned computerized text analysis tool that categorizes words into psychologically meaningful groups. It assesses various aspects of a text, including emotional tone, cognitive processes, and structural elements, covering categories like positive and negative emotions, cognitive mechanisms, and more.

While LIWC is typically accessible through purchase or licensing, this project will employ Empath, an open-source alternative to LIWC, to conduct similar analyses.

from empath import Empath

import pandas as pd

import logging

# Initialize logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

# Create an Empath object

lexicon = Empath()

def empath_analysis(text):

try:

# Analyze the text with Empath and return normalized category scores

analysis = lexicon.analyze(text, normalize=True)

return analysis

except Exception as e:

# Log an error message if an exception occurs

logger.error(f"Error during Empath analysis: {e}")

# Return None or an empty dictionary to indicate failure

return {}

def apply_empath_analysis(df, text_column='text'):

"""

Apply Empath analysis to a column in a DataFrame and expand the results into separate columns.

"""

try:

df['empath_analysis'] = df[text_column].apply(empath_analysis)

empath_columns = df['empath_analysis'].apply(pd.Series)

df = pd.concat([df, empath_columns], axis=1)

df.drop(columns=['empath_analysis'], inplace=True)

return df

except Exception as e:

# Log an error message if an exception occurs

logger.error(f"Error applying Empath analysis to DataFrame: {e}")

# Return the original DataFrame to avoid data loss

return df

df_essays = apply_empath_analysis(df_essays)

# Explain the code in the cell. Add this line to each cell

if(CFG.EXPLAIN_CODE):

explain_code(_i)

columns_to_scale = ['help','office','dance','money','wedding','domestic_work','sleep','medical_emergency','cold','hate','cheerfulness','aggression','occupation','envy','anticipation','family','vacation','crime','attractive','masculine','prison','health','pride','dispute','nervousness','government','weakness','horror','swearing_terms','leisure','suffering','royalty','wealthy','tourism','furniture','school','magic','beach','journalism','morning','banking','social_media','exercise','night','kill','blue_collar_job','art','ridicule','play','computer','college','optimism','stealing','real_estate','home','divine','sexual','fear','irritability','superhero','business','driving','pet','childish','cooking','exasperation','religion','hipster','internet','surprise','reading','worship','leader','independence','movement','body','noise','eating','medieval','zest','confusion','water','sports','death','healing','legend','heroic','celebration','restaurant','violence','programming','dominant_heirarchical','military','neglect','swimming','exotic','love','hiking','communication','hearing','order','sympathy','hygiene','weather','anonymity','trust','ancient','deception','fabric','air_travel','fight','dominant_personality','music','vehicle','politeness','toy','farming','meeting','war','speaking','listen','urban','shopping','disgust','fire','tool','phone','gain','sound','injury','sailing','rage','science','work','appearance','valuable','warmth','youth','sadness','fun','emotional','joy','affection','traveling','fashion','ugliness','lust','shame','torment','economics','anger','politics','ship','clothing','car','strength','technology','breaking','shape_and_size','power','white_collar_job','animal','party','terrorism','smell','disappointment','poor','plant','pain','beauty','timidity','philosophy','negotiate','negative_emotion','cleaning','messaging','competing','law','friends','payment','achievement','alcohol','liquid','feminine','weapon','children','monster','ocean','giving','contentment','writing','rural','positive_emotion','musical']

plot_feature_distribution(df_essays, columns_to_scale,False) # too many, no need to view all.

ƒ(⓹) Textual Entropy

The standard method for calculating entropy is outlined below, which evaluates the unpredictability of each character or word based on its frequency. This approach is encapsulated in the formula for Shannon Entropy:

$\begin{aligned} H(T) &= -\sum_{i=1}^{n} p(x_i) \log_2 p(x_i) &&\quad\text{(Shannon Entropy)} \\ \end{aligned}$

Shannon Entropy quantifies the level of information disorder or randomness, providing a mathematical framework to assess text complexity.

import numpy as np

from collections import Counter

import logging

from tqdm import tqdm

tqdm.pandas()

import time

# Start time

start_time = time.time()

# Configure logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")

logger = logging.getLogger(__name__)

def calculate_entropy(text):

"""

Calculate the Shannon entropy of a text string.

Entropy is calculated by first determining the frequency distribution

of the characters in the text, and then using these frequencies to

calculate the probabilities of each character. The Shannon entropy

is the negative sum of the product of probabilities and their log2 values.

Args:

text (str): The text string to calculate entropy for.

Returns:

float: The calculated entropy of the text, or 0 if text is empty/non-string.

None: In case of an exception during calculation.

"""

try:

if not text or not isinstance(text, str):

logger.warning("Text is empty or not a string.")

return 0

# Calculating frequency distribution and probabilities

freq_dist = Counter(text)

probs = [freq / len(text) for freq in freq_dist.values()]

# Calculate entropy, avoiding log2(0)

entropy = -sum(p * np.log2(p) for p in probs if p > 0)

return entropy

except Exception as e:

logger.error(f"Error calculating entropy: {e}")

return None

%timeit

try:

df_essays["textual_entropy"] = df_essays["text"].progress_apply(calculate_entropy)

end_time = time.time()

duration = end_time - start_time

print(f"Process completed in {duration:.2f} seconds")

except Exception as e:

logger.error(f"Error applying entropy calculation to DataFrame: {e}")

end_time = time.time()

duration = end_time - start_time

print(f"Process completed in {duration:.2f} seconds")

if(CFG.EXPLAIN_CODE):

explain_code(_i)

100%|██████████| 4000/4000 [00:00<00:00, 6629.02it/s]

Process completed in 0.61 seconds

categories_to_plot = ['textual_entropy']

plot_feature_distribution(df_essays, categories_to_plot,True)

ƒ(⓺) Syntactic Tree Patterns

Syntactic Tree Pattern Analysis The analysis involves parsing essays into syntactic trees to observe pattern frequencies and patterns, focusing on AI-generated and human-written text differences. This process employs the Berkeley Neural Parser, part of the Self-Attentive Parser[5][6] suite. The code is designed to parse natural language texts, specifically our essay data, using Natural Language Processing (NLP) techniques.The function process_syntactic_tree_patterns is integral to this analysis. It utilizes

spaCy, benepar, and NLTK to dissect the syntactic structures of texts, calculating metrics like tree

depth, branching factors, nodes, leaves, and production rules. Additionally, it includes text analysis

features like token length, sentence length, and entity analysis.

Features

-

num_sentences: Counts the total number of sentences in the text, providing an overview of text segmentation. -

num_tokens: Tallies the total number of tokens (words and punctuation) in the text, reflecting the overall length. -

num_unique_lemmas: Counts distinct base forms of words (lemmas), indicating the diversity of vocabulary used. -

average_token_length: Calculates the average length of tokens, shedding light on word complexity and usage. -

average_sentence_length: Determines the average number of tokens per sentence, indicating sentence complexity. -

num_entities: Counts named entities (like people, places, organizations) recognized in the text, useful for understanding the focus and context. -

num_noun_chunks: Tallies noun phrases, providing insights into the structure and complexity of nominal groups. -

num_pos_tags: Counts the variety of parts of speech tags, reflecting grammatical diversity. -

num_distinct_entities: Determines the number of unique named entities, indicative of the text's contextual richness. -

average_entity_length: Calculates the average length of recognized entities, contributing to understanding the detail level of named references. -

average_noun_chunk_length: Measures the average length of noun chunks, indicating the complexity and composition of noun phrases. -

max_depth: Determines the maximum depth of syntactic trees in the text, a measure of syntactic complexity. -

avg_branching_factor: Calculates the average branching factor of syntactic trees, reflecting the structural complexity and diversity. -

total_nodes: Counts the total number of nodes in all syntactic trees, indicating the overall structural richness of the text. -

total_leaves: Tallies the leaves in syntactic trees, correlated with sentence simplicity or complexity. -

unique_rules: Counts the unique syntactic production rules found across all trees, indicative of syntactic variety. -

tree_complexity: Measures the complexity of the syntactic trees by comparing the number of nodes to leaves. -

depth_variability: Calculates the standard deviation of tree depths, indicating the variability in syntactic complexity across sentences.

These features collectively provide a comprehensive linguistic and structural analysis of the text, offering valuable insights into the syntactic and semantic characteristics of the processed essays.

import spacy

import benepar

import numpy as np

import pandas as pd

import logging

from collections import Counter

from nltk import Tree

from transformers import T5TokenizerFast

from tqdm import tqdm

tqdm.pandas()

import time

# Start time

#start_time = time.time()

# Configure logging

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

logger = logging.getLogger(__name__)

import traceback

def process_syntactic_tree_patterns(df_essays):

start_time = time.time()

"""

Process a DataFrame containing essays to extract various syntactic tree pattern features.

The function uses spaCy, benepar, and NLTK to analyze syntactic structures of text,

calculating various metrics such as tree depth, branching factors, nodes, leaves,

and production rules. It also includes text analysis features like token length,

sentence length, and entity analysis.

Args:

df_essays (pandas.DataFrame): DataFrame containing a 'text' column with essays.

Returns:

pandas.DataFrame: DataFrame with additional columns for each extracted syntactic and textual feature.

"""

tokenizer = T5TokenizerFast.from_pretrained('t5-base', model_max_length=512, validate_args=False)

try:

nlp = spacy.load('en_core_web_lg') # Gotta use en_core_web_lg to use benepar_en3 for spacy 3.0

# Just add the pipe.

# nlp.add_pipe("benepar", config={"model": "benepar_en3"})

if spacy.__version__.startswith('2'):

benepar.download('benepar_en3')

nlp.add_pipe(benepar.BeneparComponent("benepar_en3"))

else:

nlp.add_pipe("benepar", config={"model": "benepar_en3"})

except Exception as e:

logger.error(f"Failed to load spaCy model: {e}")

return df_essays

def spacy_to_nltk_tree(node):

if node.n_lefts + node.n_rights > 0:

return Tree(node.orth_, [spacy_to_nltk_tree(child) for child in node.children])

else:

return node.orth_

def tree_depth(node):

if not isinstance(node, Tree):

return 0

else:

return 1 + max(tree_depth(child) for child in node)

def tree_branching_factor(node):

if not isinstance(node, Tree):

return 0

else:

return len(node)

def count_nodes(node):

if not isinstance(node, Tree):

return 1

else:

return 1 + sum(count_nodes(child) for child in node)

def count_leaves(node):

if not isinstance(node, Tree):

return 1

else:

return sum(count_leaves(child) for child in node)

def production_rules(node):

rules = []

if isinstance(node, Tree):

rules.append(node.label())

for child in node:

rules.extend(production_rules(child))

return rules

def count_labels_in_tree(tree, label):

if not isinstance(tree, Tree):

return 0

count = 1 if tree.label() == label else 0

for subtree in tree:

count += count_labels_in_tree(subtree, label)

return count

def count_phrases_by_label(trees, label, doc):

if label == 'NP':

noun_phrases = [chunk.text for chunk in doc.noun_chunks]

return noun_phrases

else:

return sum(count_labels_in_tree(tree, label) for tree in trees if isinstance(tree, Tree))

def count_subtrees_by_label(trees, label):

return sum(count_labels_in_tree(tree, label) for tree in trees if isinstance(tree, Tree))

def average_phrase_length(trees):

lengths = [len(tree.leaves()) for tree in trees if isinstance(tree, Tree)]

return np.mean(lengths) if lengths else 0

def subtree_height(tree, side):

if not isinstance(tree, Tree) or not tree:

return 0

if side == 'left':

return 1 + subtree_height(tree[0], side)

elif side == 'right':

return 1 + subtree_height(tree[-1], side)

def average_subtree_height(trees):

heights = [tree_depth(tree) for tree in trees if isinstance(tree, Tree)]

return np.mean(heights) if heights else 0

def pos_tag_distribution(trees):

pos_tags = [tag for tree in trees for word, tag in tree.pos()]

return Counter(pos_tags)

def process_tree_or_string(obj):

if isinstance(obj, Tree):

return obj.height()

else:

return None

def syntactic_ngrams(tree):

ngrams = []

if isinstance(tree, Tree):

ngrams.extend(list(nltk.ngrams(tree.pos(), 2)))

return ngrams

for index, row in df_essays.iterrows():

text = row['text']

try:

doc = nlp(text)

trees = [spacy_to_nltk_tree(sent.root) for sent in doc.sents if len(tokenizer.tokenize(sent.text)) < 512]

trees = [tree for tree in trees if isinstance(tree, Tree)]

# Extract features

depths = [tree_depth(tree) for tree in trees if isinstance(tree, Tree)]

branching_factors = [tree_branching_factor(tree) for tree in trees if isinstance(tree, Tree)]

nodes = [count_nodes(tree) for tree in trees if isinstance(tree, Tree)]

leaves = [count_leaves(tree) for tree in trees if isinstance(tree, Tree)]

rules = [production_rules(tree) for tree in trees if isinstance(tree, Tree)]

rule_counts = Counter([rule for sublist in rules for rule in sublist])

# Text analysis features

num_sentences = len(list(doc.sents))

num_tokens = len(doc)

unique_lemmas = set([token.lemma_ for token in doc])

total_token_length = sum(len(token.text) for token in doc)

average_token_length = total_token_length / num_tokens if num_tokens > 0 else 0

average_sentence_length = num_tokens / num_sentences if num_sentences > 0 else 0

num_entities = len(doc.ents)

num_noun_chunks = len(list(doc.noun_chunks))

pos_tags = [token.pos_ for token in doc]

num_pos_tags = len(set(pos_tags))

distinct_entities = set([ent.text for ent in doc.ents])

total_entity_length = sum(len(ent.text) for ent in doc.ents)

average_entity_length = total_entity_length / num_entities if num_entities > 0 else 0

total_noun_chunk_length = sum(len(chunk.text) for chunk in doc.noun_chunks)

average_noun_chunk_length = total_noun_chunk_length / num_noun_chunks if num_noun_chunks > 0 else 0

ngrams = []

for tree in trees:

ngrams.extend(syntactic_ngrams(tree))

# Assign calculated feature values to the DataFrame

df_essays.at[index, 'num_sentences'] = num_sentences

df_essays.at[index, 'num_tokens'] = num_tokens

df_essays.at[index, 'num_unique_lemmas'] = len(unique_lemmas)

df_essays.at[index, 'average_token_length'] = average_token_length

df_essays.at[index, 'average_sentence_length'] = average_sentence_length

df_essays.at[index, 'num_entities'] = num_entities

df_essays.at[index, 'num_noun_chunks'] = num_noun_chunks

df_essays.at[index, 'num_pos_tags'] = num_pos_tags

df_essays.at[index, 'num_distinct_entities'] = len(distinct_entities)

df_essays.at[index, 'average_entity_length'] = average_entity_length

df_essays.at[index, 'average_noun_chunk_length'] = average_noun_chunk_length

df_essays.at[index, 'max_depth'] = max(depths) if depths else 0

df_essays.at[index, 'avg_branching_factor'] = np.mean(branching_factors) if branching_factors else 0

df_essays.at[index, 'total_nodes'] = sum(nodes)

df_essays.at[index, 'total_leaves'] = sum(leaves)

df_essays.at[index, 'unique_rules'] = len(rule_counts)

df_essays.at[index, 'most_common_rule'] = rule_counts.most_common(1)[0][0] if rule_counts else None

df_essays.at[index, 'tree_complexity'] = sum(nodes) / sum(leaves) if leaves else 0

df_essays.at[index, 'depth_variability'] = np.std(depths)

#df_essays.at[index, 'subtree_freq_dist'] = Counter([' '.join(node.leaves()) for tree in trees for node in tree.subtrees() if isinstance(node, Tree)])

df_essays.at[index, 'tree_height_variability'] = np.std([subtree_height(tree, 'left') for tree in trees if isinstance(tree, Tree)])

#df_essays.at[index, 'pos_tag_dist'] = pos_tag_distribution(trees)

#df_essays.at[index, 'syntactic_ngrams'] = ngrams

except Exception as e:

logger.error(f"Error processing text: {e}")

traceback.print_exc()

# Assign NaNs in case of error

# df_essays.at[index, 'num_sentences'] = np.nan

# ... Assign NaNs for other features ...

return df_essays

#%timeit

# Usage

try:

print("Step 7: process_syntactic_tree_patterns")

df_essays = process_syntactic_tree_patterns(df_essays)

end_time = time.time()

# Calculate duration

duration = end_time - start_time

except Exception as e:

logger.error(f"ERROR: process_syntactic_tree_patterns: {e}")

# Explain the code in the cell. Add this line to each cell

if(CFG.EXPLAIN_CODE):

explain_code(_i)

Step 7: process_syntactic_tree_patterns

You're using a T5TokenizerFast tokenizer. Please note that with a fast tokenizer, using the `__call__` method is faster than using a method to encode the text followed by a call to the `pad` method to get a padded encoding.

/Users/lange/anaconda3/envs/py39/lib/python3.9/site-packages/torch/distributions/distribution.py:53: UserWarning:

<class 'torch_struct.distributions.TreeCRF'> does not define `arg_constraints`. Please set `arg_constraints = {}` or initialize the distribution with `validate_args=False` to turn off validation.

/Users/lange/anaconda3/envs/py39/lib/python3.9/site-packages/torch/distributions/distribution.py:53: UserWarning:

<class 'torch_struct.distributions.TreeCRF'> does not define `arg_constraints`. Please set `arg_constraints = {}` or initialize the distribution with `validate_args=False` to turn off validation.

categories_to_plot = ['num_sentences','num_tokens','num_unique_lemmas','average_token_length','average_sentence_length','num_entities','num_noun_chunks','num_pos_tags','num_distinct_entities','average_entity_length','average_noun_chunk_length','max_depth','avg_branching_factor','total_nodes','total_leaves','unique_rules','tree_complexity','depth_variability']

plot_feature_distribution(df_essays, categories_to_plot,True)

def sanity_check():

columns_with_nan = df_essays.columns[df_essays.isna().any()].tolist()

nan_count = df_essays[columns_with_nan].isna().sum()

print(nan_count)

for column, count in zip(columns_with_nan, nan_count):

print(f"Column '{column}' has {count} NaN value(s).")

assert nan_count.sum() == 0, "NaN values found in the DataFrame."

print("There are no missing values in df_essays.")

df_essays.dropna(inplace=True)

sanity_check()

Series([], dtype: float64)

There are no missing values in df_essays.

# Create a deep copy so i can use the original df_essays later

df_essays_copy = df_essays.copy(deep=True) ## for now

from sklearn.preprocessing import MinMaxScaler, StandardScaler

def scale_columns(df, columns_to_scale, scaler=None, scale_type='MinMaxScaler'):

"""

Scale the specified columns in a DataFrame and add a suffix to the column names.

Args:

df (pandas.DataFrame): The DataFrame to scale.

columns_to_scale (list): List of column names to scale.

scaler (object, optional): Scaler object to use for scaling. If None, a new scaler object will be created.

scale_type (str, optional): The type of scaler to use. Default is 'MinMaxScaler'. Options: 'MinMaxScaler', 'StandardScaler'.

Returns:

pandas.DataFrame: The full DataFrame with scaled columns added.

pandas.DataFrame: A separate DataFrame with only the specified columns scaled.

object: The scaler object used for scaling.

"""

if scale_type == 'MinMaxScaler':

scaler = MinMaxScaler() if scaler is None else scaler

elif scale_type == 'StandardScaler':

scaler = StandardScaler() if scaler is None else scaler

else:

raise ValueError("Invalid scale_type. Options: 'MinMaxScaler', 'StandardScaler'")

scaled_columns = scaler.fit_transform(df[columns_to_scale])

scaled_df = pd.DataFrame(scaled_columns, columns=[col + '_scaled' for col in columns_to_scale])

full_df = pd.concat([df.drop(columns=columns_to_scale), scaled_df], axis=1)

return full_df, scaled_df, scaler

import joblib

columns_to_scale = ['flesch_kincaid_grade', 'gunning_fog', 'coleman_liau_index', 'smog_index', 'ari', 'dale_chall', 'textual_entropy', 'semantic_density', 'semantic_flow_variability']

readability_scaled_backin_df, readability_scaled_df, readability_scaler = scale_columns(df_essays_copy, columns_to_scale, scale_type='MinMaxScaler')

joblib.dump(readability_scaler, f'{CFG.SCRATCH_PATH}/scaler_semantic_features.pkl', compress=True)

['scratch/scaler_semantic_features.pkl']

columns_to_scale = ['help','office','dance','money','wedding','domestic_work','sleep','medical_emergency','cold','hate','cheerfulness','aggression','occupation','envy','anticipation','family','vacation','crime','attractive','masculine','prison','health','pride','dispute','nervousness','government','weakness','horror','swearing_terms','leisure','suffering','royalty','wealthy','tourism','furniture','school','magic','beach','journalism','morning','banking','social_media','exercise','night','kill','blue_collar_job','art','ridicule','play','computer','college','optimism','stealing','real_estate','home','divine','sexual','fear','irritability','superhero','business','driving','pet','childish','cooking','exasperation','religion','hipster','internet','surprise','reading','worship','leader','independence','movement','body','noise','eating','medieval','zest','confusion','water','sports','death','healing','legend','heroic','celebration','restaurant','violence','programming','dominant_heirarchical','military','neglect','swimming','exotic','love','hiking','communication','hearing','order','sympathy','hygiene','weather','anonymity','trust','ancient','deception','fabric','air_travel','fight','dominant_personality','music','vehicle','politeness','toy','farming','meeting','war','speaking','listen','urban','shopping','disgust','fire','tool','phone','gain','sound','injury','sailing','rage','science','work','appearance','valuable','warmth','youth','sadness','fun','emotional','joy','affection','traveling','fashion','ugliness','lust','shame','torment','economics','anger','politics','ship','clothing','car','strength','technology','breaking','shape_and_size','power','white_collar_job','animal','party','terrorism','smell','disappointment','poor','plant','pain','beauty','timidity','philosophy','negotiate','negative_emotion','cleaning','messaging','competing','law','friends','payment','achievement','alcohol','liquid','feminine','weapon','children','monster','ocean','giving','contentment','writing','rural','positive_emotion','musical']

psycho_scaled_df_backin_df, psycho_scaled_df, psycho_scaler = scale_columns(df_essays_copy, columns_to_scale, scale_type='MinMaxScaler')

joblib.dump(psycho_scaler, f'{CFG.SCRATCH_PATH}/scaler_psycho_features.pkl', compress=True)

['scratch/scaler_psycho_features.pkl']

columns_to_scale = ['num_sentences', 'num_tokens', 'num_unique_lemmas', 'average_token_length', 'average_sentence_length', 'num_entities', 'num_noun_chunks', 'num_pos_tags', 'num_distinct_entities', 'average_entity_length', 'average_noun_chunk_length', 'max_depth', 'avg_branching_factor', 'total_nodes', 'total_leaves', 'unique_rules', 'tree_complexity', 'depth_variability']

tree_feature_scaler_backin_df, tree_features_scaled_df, tree_feature_scaler = scale_columns(df_essays_copy, columns_to_scale, scale_type='MinMaxScaler')

joblib.dump(tree_feature_scaler, f'{CFG.SCRATCH_PATH}/scaler_tree_features.pkl', compress=True)

['scratch/scaler_tree_features.pkl']

final_features_df = pd.concat([readability_scaled_df,tree_features_scaled_df,psycho_scaled_df], axis=1)

print("Shape df_essays_copy: " + str(df_essays_copy.shape))

print("Semantic Features Scaled: " + str(final_features_df.shape))

#final_features_df.head()

Shape df_essays_copy: (3999, 227)

Semantic Features Scaled: (3999, 221)

if(CFG.CLEARML_ON):

# These are the final before uploading and starting the modeling process.

upload_dataset_from_dataframe(final_features_df,"training_with_features_scaled",

'LLM-detect-ai-gen-text-LIVE/dev/notebook/preprocess',

"Training Data with Features, Post the scaling",

["training_with_features_scaled","feature"],

"scratch/training_with_features_scaled.pkl")

upload_dataset_from_dataframe(df_essays_copy,"training_with_features",

'LLM-detect-ai-gen-text-LIVE/dev/notebook/preprocess',

"Training Data with Features, Before Scaling",

["training_with_features","feature"],

"scratch/training_with_features.pkl")

clearml_handler.task.get_logger().report_table(title='training_with_features_scaled',series='Train Essays Features',

iteration=0,table_plot=final_features_df)

clearml_handler.task.close()

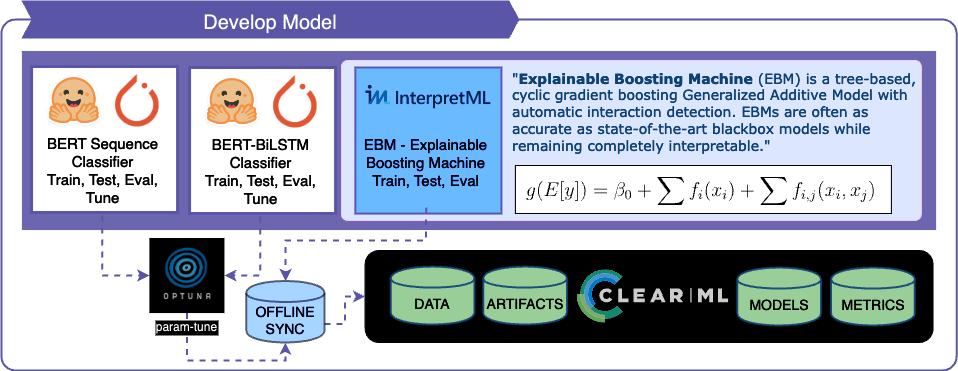

Model Overview

-

BertForSequenceClassification:

- Architecture: BERT (Bidirectional Encoder Representations from Transformers) for sequence classification. [4]

- Process: Involves data pre-processing, data loading, and training with hyperparameter optimization.

- Metrics: Trained and monitored using accuracy, precision, recall, F1 score, and AUC.

-

BertModel + BiLSTM:

- Architecture: The model is composed of the BertModel layer followed by BiLSTM layers. This is further connected to a dropout layer for regularization, a fully connected linear layer with ReLU activation, and a final linear layer for classification.

-

Explainable Boosting Machine (EBM) for Feature Classification:

- Type: Glass-box model, notable for interpretability and effectiveness.

- Function: Classifies based on extracted features from the essays.

- Configuration: Includes settings for interaction depth, learning rate, and validation size.

- Insights: Provides understanding of feature importance and model behavior.

- Causality This is a 'casual' model and the EBM is for helping dertermine causality aloong with our feature stats

Ensemble Approach

- Final Output Calculation: The outputs of each model are summed and then averaged to determine the ensemble's final output.

def sanity_check_2():

# Check the number of missing values

assert final_features_df.isnull().sum().sum() == 0, "There are missing values in final_features_df."

print("There are no missing values in final_features_df.")

# Check the data types

assert final_features_df.dtypes.unique().tolist() == [np.float64], "The data types of final_features_df are incorrect."

print("Data types of final_features_df are correct.")

# compare row count and assert on error

assert df_essays_copy.shape[0] == final_features_df.shape[0], "Row count mismatch between df_essays_copy and final_features_df"

print("Row count between df_essays_copy and final_features_df is correct")

# Check the range of values

# assert final_features_df.max().max() <= 1 and final_features_df.min().min() >= 0, "The values in final_features_df are not between 0 and 1."

# print("All values in final_features_df are between 0 and 1.")

sanity_check_2() # I had to take a week off after sanity_check_1() :)

There are no missing values in final_features_df.

Data types of final_features_df are correct.

Row count between df_essays_copy and final_features_df is correct

🤗 BERT for Sequence Classification

bert-base-uncased

Leveraging the 🤗 bert-base-uncased model, this section integrates the BertForSequenceClassification for efficient text sequence classification, combining BERT's advanced language processing with a streamlined, single-layer architecture for optimal simplicity and efficacy

Overview

- Model Type: BertForSequenceClassification, adapted from the pre-trained BERT model.

- Architecture: Integrates BERT's transformer layers with a single linear layer for classification.

- Design Advantage: Leverages BERT's advanced language understanding while ensuring efficiency in classification tasks.

Rationale

- Pre-trained Foundation: Utilizes the foundational BERT model pre-trained on a vast corpus. This approach leverages existing rich text representations, reducing the need for extensive training data and time.

- Simplicity and Efficiency Balance: Achieves a balance between simplicity and operational efficiency. The single linear layer addition to BERT's framework allows for effective handling of various classification tasks without overly complicating the model or extending training duration.

import torch

import pandas as pd

import numpy as np

from torch.utils.data import DataLoader

from transformers import BertTokenizer, BertForSequenceClassification, TrainingArguments

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, roc_auc_score, confusion_matrix

import optuna

import logging

from torch.utils.tensorboard import SummaryWriter

import time

import random

# need to reconfig to error. with logging.INFO it's madness

logging.basicConfig(level=logging.ERROR)

logger = logging.getLogger(__name__)

model_path = CFG.BERT_MODEL

class TextDataset(torch.utils.data.IterableDataset):

def __init__(self, dataframe, tokenizer, max_length):

self.dataframe = dataframe

self.tokenizer = tokenizer

self.max_length = max_length

def __iter__(self):

for index, row in self.dataframe.iterrows():

text = row['text']

label = row['label']

# Encoding the text - BERT style!

encoding = self.tokenizer.encode_plus(

text,

add_special_tokens=True,

max_length=self.max_length,

padding='max_length',

truncation=True,

return_attention_mask=True,

return_tensors='pt'